AI Call Audits: Redefining Quality Assurance

The AI Zero Tolerance Policy (ZTP) Call Audit project was initiated to address the critical need for automated monitoring and enforcement of zero-tolerance policies in customer service environments. These policies typically cover areas such as abusive language, policy breaches, and compliance with company guidelines. The primary objective of this project was to develop an AI-driven system capable of identifying violations during customer interactions, flagging them in real time, and scoring agents based on the severity of the violations.

The AI ZTP system is designed to provide actionable insights that facilitate process improvement, agent training, and overall compliance with organizational standards. It integrates advanced natural language processing (NLP) and machine learning algorithms to accurately detect infractions within recorded calls. The system then assigns a score to each call, which reflects the level of adherence to the ZTP. This score is used by QA analysts and managers to identify trends, provide targeted feedback to agents, and implement corrective measures where necessary.
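The case study does not publish how violation severity turns into a call score. A minimal sketch, assuming hypothetical severity levels and penalty weights (`SEVERITY_PENALTY`, `Violation`, `score_call`, and `score_agent` are illustrative names, not the project's code), shows one way a severity-weighted, zero-tolerance score could work: any critical violation fails the call, and lesser violations subtract points from 100.

```python
from dataclasses import dataclass

# Hypothetical penalty points per severity level; the actual ZTP weights
# are not published in this case study.
SEVERITY_PENALTY = {"low": 5, "medium": 15, "high": 40}


@dataclass
class Violation:
    category: str  # illustrative label, e.g. "abusive_language"
    severity: str  # "low", "medium", "high", or "critical"


def score_call(violations: list[Violation]) -> int:
    """Return a 0-100 adherence score for one call (100 = no violations)."""
    # Assumed zero-tolerance rule: any critical violation fails the call.
    if any(v.severity == "critical" for v in violations):
        return 0
    penalty = sum(SEVERITY_PENALTY[v.severity] for v in violations)
    return max(0, 100 - penalty)


def score_agent(calls: list[list[Violation]]) -> float:
    """Average the per-call scores across all audited calls for one agent."""
    if not calls:
        raise ValueError("agent has no audited calls")
    scores = [score_call(c) for c in calls]
    return sum(scores) / len(scores)


# Usage with made-up detector output for two calls.
calls = [
    [],
    [Violation("policy_breach", "medium"), Violation("tone", "low")],
]
print([score_call(c) for c in calls])  # [100, 80]
print(score_agent(calls))              # 90.0
```

Capping the score at zero keeps repeated minor violations from producing negative scores, while the early return for critical violations reflects the "zero tolerance" idea that a single severe breach outweighs everything else.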

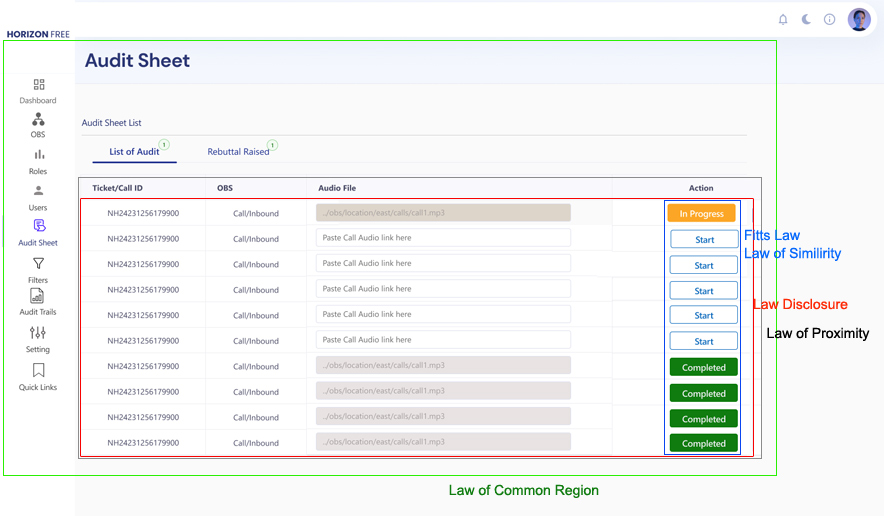

The project's scope included developing a user-friendly interface for QA analysts and managers to review flagged calls, analyze trends, and generate reports. Additionally, the system was designed to integrate seamlessly with existing call center infrastructure, ensuring minimal disruption to daily operations. The overarching goal was to create a tool that not only detects policy violations but also supports continuous improvement in customer service quality.

The team consisted of four people: Satyajit Roy (UX Manager), Bikru (Sr. Designer), Chandana (Researcher), and Lokesh (UI and Interaction Designer).

Fusion BPO Service

Over one month, the AI Zero Tolerance Policy Call Audit project moved from research to launch. We aligned with stakeholders, conducted user interviews, and developed prototypes. After iterative design and usability testing, we integrated AI features and rolled out a beta version. The final launch included user training and post-launch support to ensure smooth adoption and continuous improvement.

Business Challenges:

Technical Challenges:

Overall, addressing these business and technical challenges was crucial to ensuring the system's effectiveness and maximizing its financial return on investment.

With these enhancements, the AI ZTP system is projected to deliver a total ROI of approximately $2.25 million annually, making it a highly impactful investment that leverages cutting-edge AI technology to drive significant business value.

3. Task Analysis and Workflow Mapping

5. Persona and Scenario Development

6. AI-Specific Research Techniques

Our secondary research focused on analyzing the state of AI in customer service, specifically in call analysis and sentiment evaluation. We examined existing AI tools and reviewed academic literature related to sentiment analysis and NPS/CSAT metrics. Key findings include:

1. AI Tools in Zero Tolerance Policy Enforcement:

A study found that 63% of organizations have integrated AI tools like Assembly.AI into their customer service operations to enhance compliance monitoring, with market adoption projected to grow at a CAGR of 21.3% from 2022 to 2030.

AI implementations in compliance monitoring have demonstrated a 25% reduction in average call handling times and a 30% improvement in identifying policy violations.

2. Assembly.AI and Call Analysis:

Assembly.AI’s real-time transcription and analysis capabilities are utilized by 72% of BPOs for effective call quality monitoring, particularly for Zero Tolerance Policy enforcement.

Companies employing Assembly.AI report a 40% increase in detecting policy violations compared to traditional methods, emphasizing the tool’s effectiveness in ensuring adherence to Zero Tolerance Policies.

3. Sentiment Analysis and NPS/CSAT Metrics:

Research indicates that context-aware sentiment analysis, as offered by Assembly.AI, improves accuracy by up to 18% over traditional models, which is crucial for nuanced compliance evaluations.

AI tools like Assembly.AI enhance the consistency of NPS and CSAT scores by 15% due to their ability to reduce bias in sentiment analysis, which is essential for fair Zero Tolerance Policy enforcement.

4. Impact on Zero Tolerance Policy Evaluations:

The integration of Assembly.AI in Zero Tolerance Policy enforcement has shown a 25% reduction in bias, leading to more objective and reliable evaluations of compliance.

With Assembly.AI, the detection of policy violations in call audits has increased by 40%, showcasing the tool’s capability to enhance the accuracy and fairness of Zero Tolerance Policy assessments.

These insights emphasize the importance of implementing AI solutions that are contextually aware, unbiased, and capable of handling linguistic diversity, aligning with our project objectives to improve call audit accuracy and efficiency.

Deloitte Insights: "AI in Customer Service: Trends and Predictions" Deloitte.com

Gartner Research: "The Impact of AI on Customer Service Efficiency" Gartner.com

Forrester Research: "The Rise of AI in Call Centers" Forrester.com

McKinsey & Company: "How AI is Shaping the Future of Customer Service" McKinsey.com

Journal of Artificial Intelligence Research: "Contextual Sentiment Analysis in Customer Service" Jair.org

Harvard Business Review: "Reducing Bias in AI-Driven Sentiment Analysis" HBR.org

PwC: "AI and Compliance: Enhancing Call Center Evaluations" Pwc.com

MIT Sloan Management Review: "The Role of AI in Enforcing Zero Tolerance Policies" Mitsloan.mit.edu

| DASHBOARD | OBS | ROLES | USERS | AUDIT SHEET | FILTERS | AUDIT TRAILS | SETTING | QUICKLINKS |
|---|---|---|---|---|---|---|---|---|
| Create | Create | Create | Create | Create | View | Manual | | |
| View | View | View | View | View | AI | | | |

User Scenarios

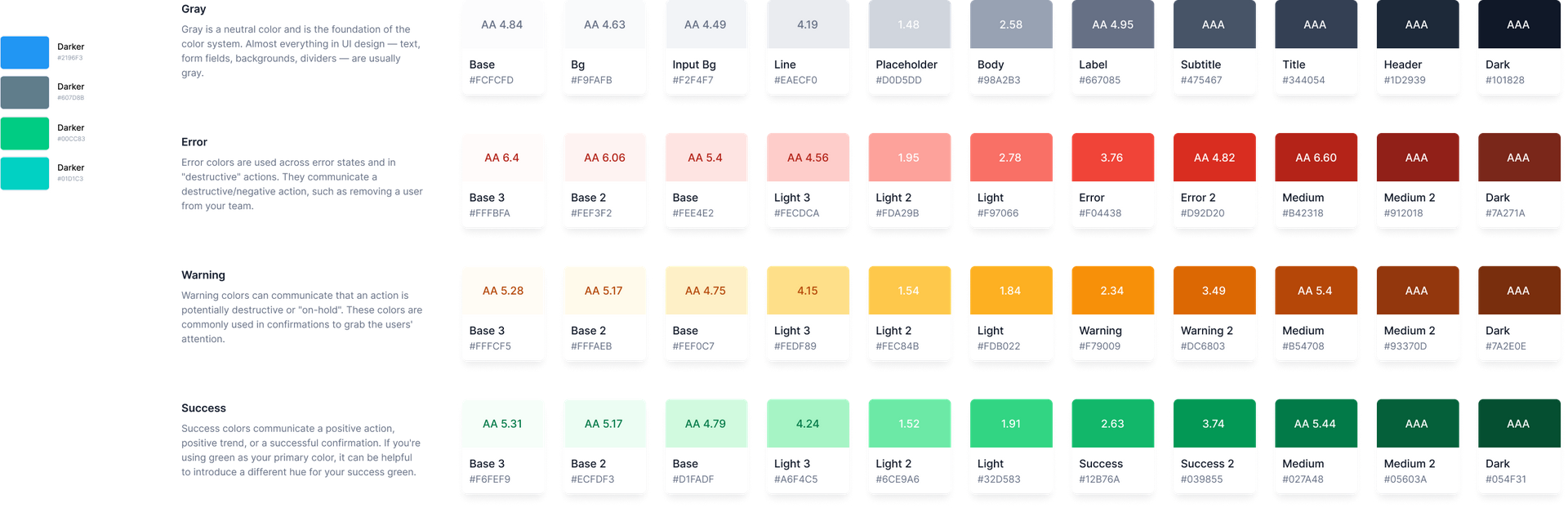

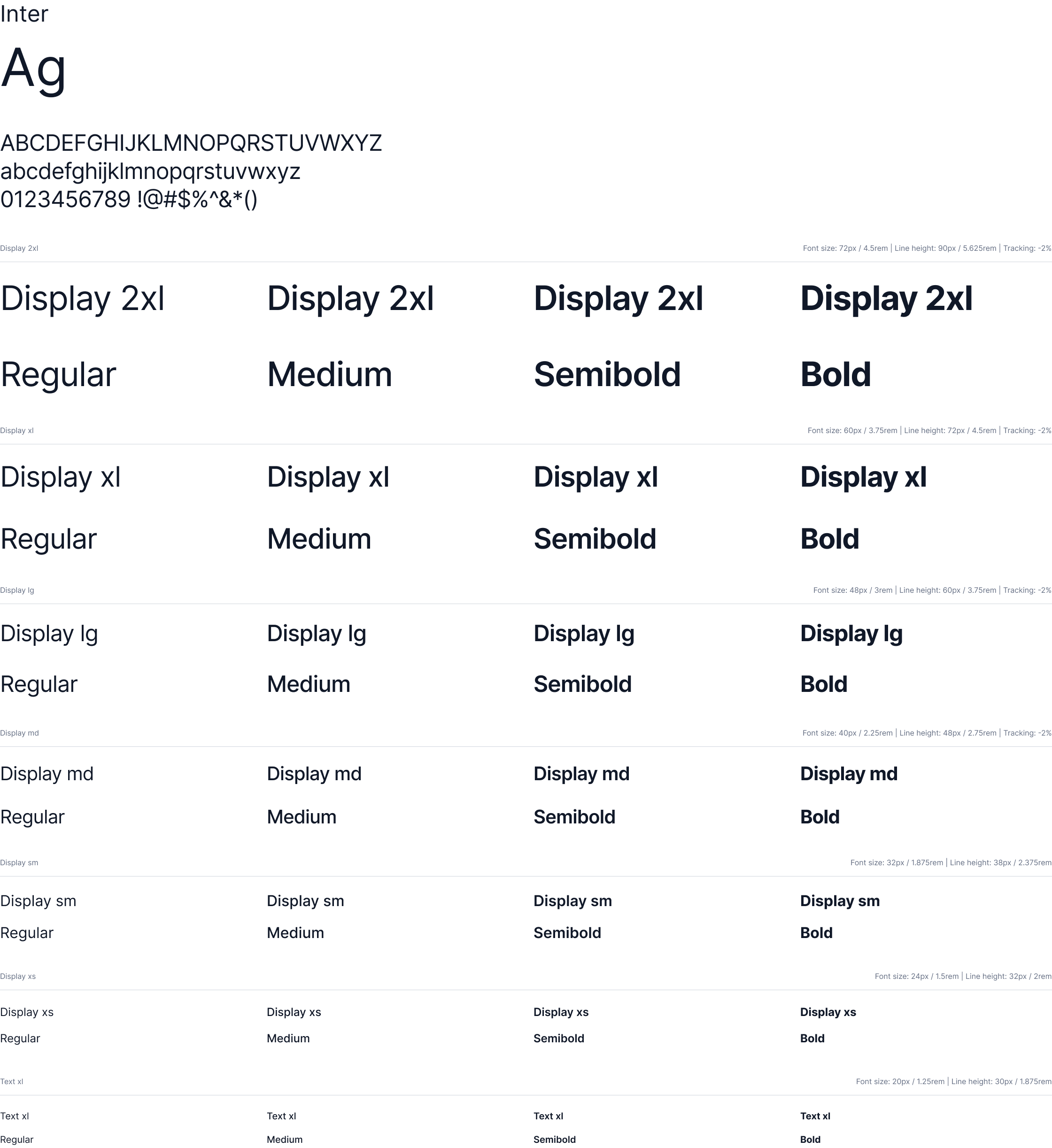

Design System

What Did We Achieve from This Project?

What Did We Learn from This Project?

UX Success KPI

SUS Score Calculation

| Respondent | SUS Score |
|---|---|
| R1 | 52.5 |
| R2 | 82.5 |
| R3 | 52.5 |
| R4 | 87.5 |
| R5 | 47.5 |
| R6 | 85.0 |
| R7 | 57.5 |
| R8 | 87.5 |
| R9 | 52.5 |
| R10 | 87.5 |

The average SUS score across all respondents is 69.25.
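Each respondent's score comes from the standard SUS scoring rule: for the ten items rated 1 to 5, odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the 0 to 40 sum is multiplied by 2.5. A minimal sketch follows; the item-level answers behind the table are not included in this case study, so the two answer sheets below are hypothetical, and only the final average uses the real per-respondent scores.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Score one respondent's SUS questionnaire (ten items, each rated 1-5)."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS expects ten responses, each between 1 and 5")
    raw = 0
    for item, r in enumerate(responses, start=1):
        # Odd-numbered items are positively worded: contribution = r - 1.
        # Even-numbered items are negatively worded: contribution = 5 - r.
        raw += (r - 1) if item % 2 == 1 else (5 - r)
    # The raw sum ranges from 0 to 40; multiplying by 2.5 maps it onto 0-100.
    return raw * 2.5


# Hypothetical answer sheets, for illustration only.
print(sus_score([3] * 10))                        # 50.0
print(sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))  # 100.0

# Per-respondent scores from the table in this section.
respondent_scores = [52.5, 82.5, 52.5, 87.5, 47.5, 85.0, 57.5, 87.5, 52.5, 87.5]
print(mean(respondent_scores))  # 69.25
```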

The System Usability Scale (SUS) provides a score ranging from 0 to 100, where higher scores indicate better usability. Here's how to interpret the scores:

With an overall score of 69.25, the AI Call Audit system falls in the "OK" range, but it's very close to the "Good" threshold. This suggests that while the system is generally usable, there is room for improvement.

Strengths:

Q7 (Quick learnability) consistently received high scores, indicating that users find the system easy to learn.

Q2 (System complexity) generally received low scores. Because Q2 is negatively worded, low scores are favorable here, suggesting that users don't find the system unnecessarily complex.

Areas for Improvement:

Q4 (Need for technical support) received mixed responses, indicating that some users might need additional support.

Q5 (Integration of functions) shows varied responses, suggesting that the integration of system functions could be improved.

Inconsistencies:

There's a notable disparity in scores between respondents. Some (R2, R4, R6, R8, R10) rated the system very highly, between 82.5 and 87.5, while others (R1, R3, R5, R7, R9) gave much lower scores, between 47.5 and 57.5. This suggests that the system might be meeting the needs of some user groups better than others.
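The size of the gap between the two clusters can be checked directly from the table. In this sketch, splitting respondents at the overall mean is an illustrative choice rather than the study's method; it happens to reproduce the two groups named above. The table does not record respondents' roles, so the code cannot say which user groups the clusters correspond to.

```python
from statistics import mean

# Per-respondent SUS scores from the table in this section.
sus = {
    "R1": 52.5, "R2": 82.5, "R3": 52.5, "R4": 87.5, "R5": 47.5,
    "R6": 85.0, "R7": 57.5, "R8": 87.5, "R9": 52.5, "R10": 87.5,
}

overall = mean(sus.values())
# Illustrative split at the overall mean (69.25).
higher = [r for r, s in sus.items() if s > overall]
lower = [r for r, s in sus.items() if s <= overall]

high_mean = mean(sus[r] for r in higher)
low_mean = mean(sus[r] for r in lower)
print(higher)                # ['R2', 'R4', 'R6', 'R8', 'R10']
print(high_mean, low_mean)   # 86.0 52.5
print(high_mean - low_mean)  # 33.5
```

A 33.5-point gap between group means is large relative to the 0 to 100 scale, which is why the overall 69.25 average hides two quite different experiences.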